In this video, Jeremy Howard, former President and Chief Scientist of Kaggle, explains how to host a machine learning model on Hugging Face Spaces and expose it through a backend API endpoint.

He is also the lead developer at fast.ai, a course that teaches students practical AI and ML development.

He also shows how to build the ML application and connect it to Gradio, a frontend interface framework designed specifically for ML models.

This approach is a great way to quickly put together a proof of concept (PoC). I skipped to the exact time in the video where he covers the app's deployment.
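To make the Gradio idea concrete, here is a minimal sketch of what an `app.py` for a Space could look like. It is not the app from the video: `classify` is a hypothetical toy function standing in for a real model, and `build_demo` is just a name I picked for illustration. The only Gradio calls assumed are `gr.Interface` and `launch()`.

```python
def classify(text: str) -> str:
    """Hypothetical stand-in for a real model's prediction function."""
    positive_words = {"good", "great", "love"}
    words = set(text.lower().split())
    return "positive" if words & positive_words else "negative"


def build_demo():
    # Imported here so classify() can be used and tested without Gradio installed.
    import gradio as gr

    # Interface wraps a plain Python function in a web UI with a text input and a text output.
    return gr.Interface(fn=classify, inputs="text", outputs="text")


# In a Space, app.py would typically finish by launching the UI:
# build_demo().launch()
```

Swapping the toy `classify` for a function that calls your trained model is usually all it takes to turn this into a working demo.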

The video below explains how to quantize the Llama 2 model so that it runs faster on a CUDA-enabled NVIDIA GPU. Quantization is a technique that reduces the computational and memory costs of running inference.👇🏾
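If quantization is new to you, the core idea can be sketched in a few lines of plain Python. This is a simplified illustration of absmax 8-bit quantization on a handful of made-up weights, not the method or library used in the video; the function names are my own.

```python
def quantize_absmax(weights, bits=8):
    """Map floats to signed integers by scaling with the largest absolute value."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]  # made-up example values
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
print(q)         # small integers that fit in one byte each
print(restored)  # close to the original weights, with a small rounding error
```

Each weight now fits in one byte instead of two or four, which is where the memory savings come from. The price is the small rounding error you see after dequantizing.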

Here's a link to the GitHub repo with the code from the video. I tried this method out myself and got some good results, which I wrote about briefly in a LinkedIn post.

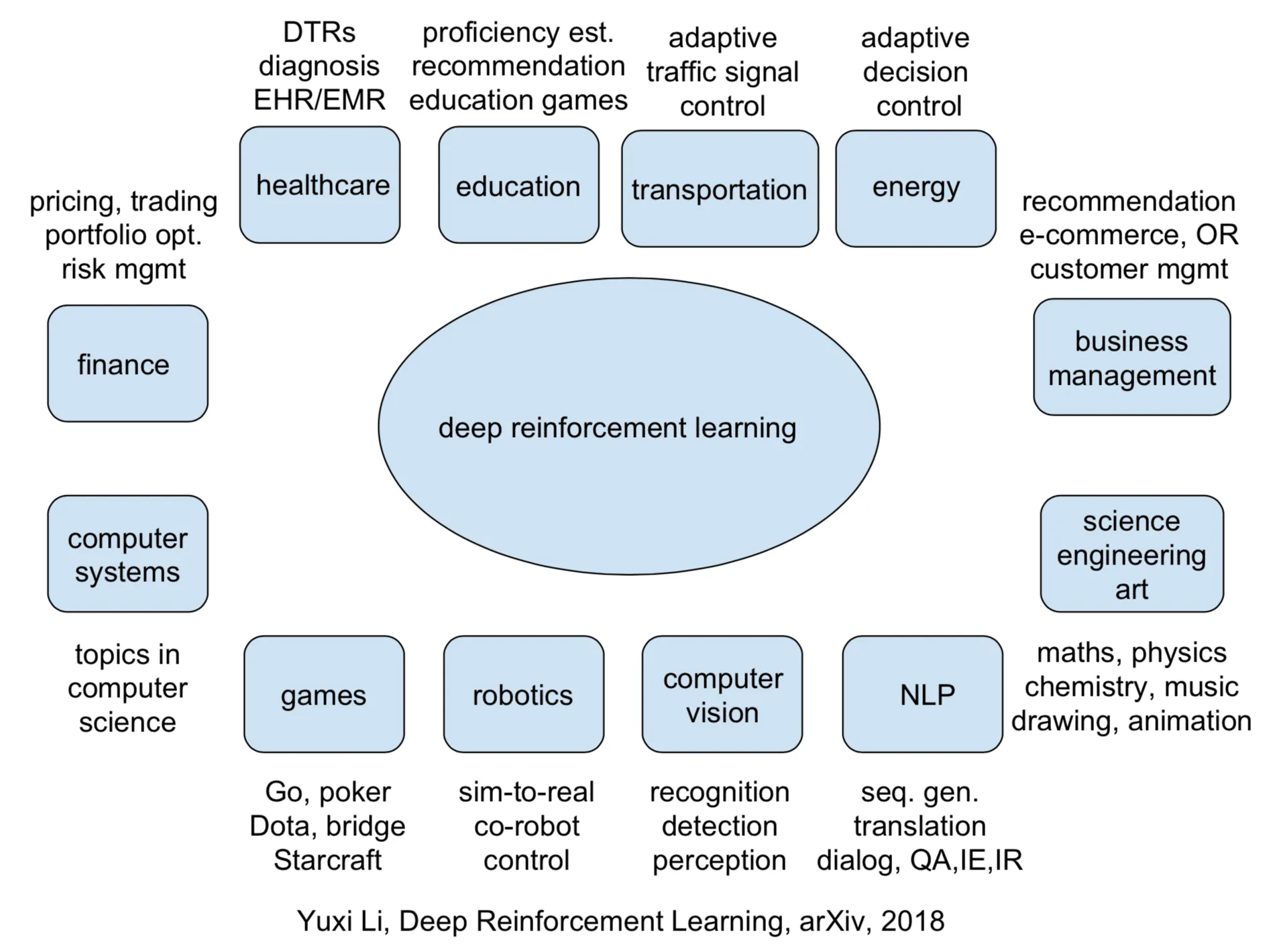

You can learn more about Deep Reinforcement Learning, and how it can be used to train ML models for better accuracy, from this research paper and this blog post.👇🏾

I would like to see how AI/ML could be applied to Puppeteer to build an app that automates browser actions and testing.

Finally, I've added a link below to the playlist if you wanna check it out later.